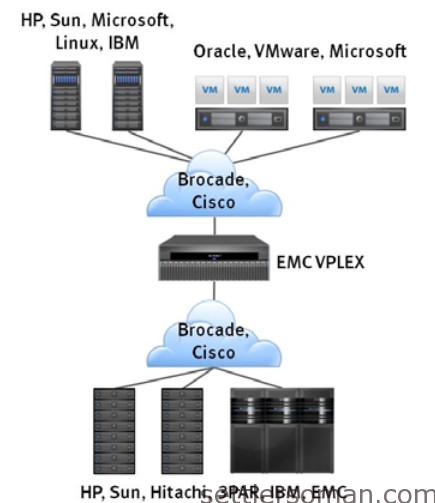

This article looks at EMC VPLEX, a storage virtualization solution. VPLEX supports both EMC storage arrays and arrays from other storage vendors, such as HDS, HP, and IBM. It supports a range of operating systems in both physical and virtual server environments, including VMware ESX and Microsoft Hyper-V. It also supports network fabrics from Brocade and Cisco, including legacy McData SANs.

VPLEX Architecture

VPLEX is deployed as a cluster consisting of one, two, or four engines (each containing two directors), and a management server. A dual-engine or quad-engine cluster also contains a pair of Fibre Channel switches for communication between directors. Each engine is protected by a standby power supply (SPS), and each Fibre Channel switch gets its power through an uninterruptible power supply (UPS). (In a dual-engine or quad-engine cluster, the management server also gets power from a UPS.)

The management server has a public Ethernet port, which provides cluster management services when connected to the customer network. This server provides the management interfaces to VPLEX, hosting the VPLEX web server process that serves the VPLEX GUI and REST-based web services interface, as well as the command line interface (CLI) service.

In the VPLEX Metro and VPLEX Geo configurations, the VPLEX Management Consoles of each cluster are inter-connected using a virtual private network (VPN) that allows for remote cluster management from a local VPLEX Management Console. When the system is deployed with a VPLEX Witness, the VPN is extended to include the Witness as well.
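The component counts described above can be summarized in a few lines of Python. This is only an illustrative sketch: `ClusterLayout` and `describe_cluster` are hypothetical names invented for this article, not part of any VPLEX tooling, and the single-engine values (no intra-cluster switches, management server not on a UPS) are inferred from the wording above rather than stated outright.

```python
from dataclasses import dataclass

VALID_ENGINE_COUNTS = (1, 2, 4)   # single-, dual-, and quad-engine clusters
DIRECTORS_PER_ENGINE = 2


@dataclass(frozen=True)
class ClusterLayout:
    """Hypothetical summary of the hardware in one VPLEX cluster."""
    engines: int
    directors: int
    fc_switches: int                 # switches for director-to-director traffic
    management_server_on_ups: bool


def describe_cluster(engines: int) -> ClusterLayout:
    """Return component counts for a cluster with the given number of engines."""
    if engines not in VALID_ENGINE_COUNTS:
        raise ValueError(f"a cluster has 1, 2, or 4 engines, got {engines}")
    multi_engine = engines > 1
    return ClusterLayout(
        engines=engines,
        directors=engines * DIRECTORS_PER_ENGINE,
        # Assumption: only dual- and quad-engine clusters carry the switch pair.
        fc_switches=2 if multi_engine else 0,
        # Assumption: a single-engine management server is not UPS-backed.
        management_server_on_ups=multi_engine,
    )


if __name__ == "__main__":
    for count in VALID_ENGINE_COUNTS:
        print(describe_cluster(count))
```

Running the script prints one layout per supported cluster size, which makes it easy to see that a quad-engine cluster has eight directors while a single-engine cluster has two.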

EMC VPLEX provides three types of configuration:

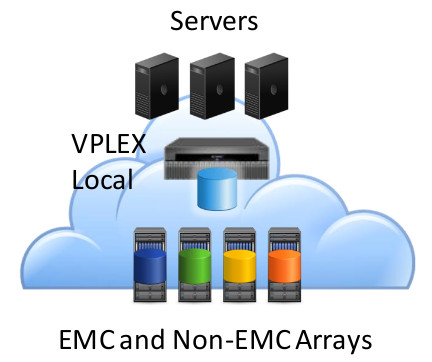

- VPLEX Local - a VPLEX cluster within a single data center.

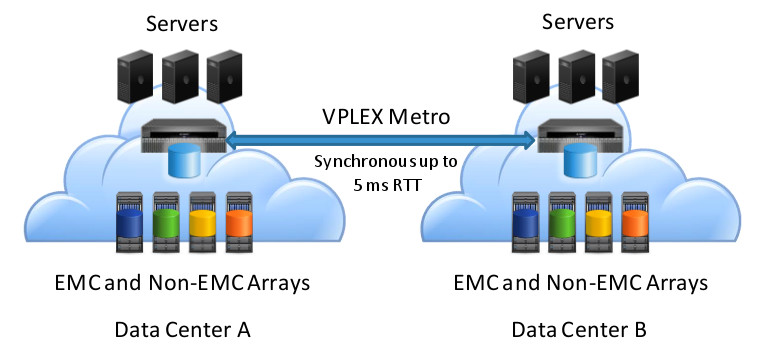

- VPLEX Metro - two VPLEX clusters located within or across multiple data centers separated by up to 5 ms of RTT latency. VPLEX Metro systems are often deployed to span two data centers that are close together (~100 km), but they can also be deployed within a single data center for applications requiring a high degree of local availability.

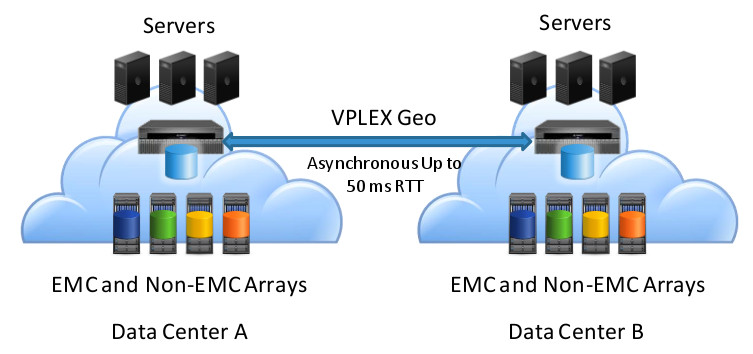

- VPLEX Geo - two VPLEX clusters located within or across multiple data centers separated by up to 50 ms of RTT latency. VPLEX Geo uses an IP-based protocol over the Ethernet WAN COM link that connects the two VPLEX clusters. This protocol is built on top of the UDP Data Transfer (UDT) protocol, which runs on top of the Layer 3 User Datagram Protocol (UDP).
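The latency limits in the list above lend themselves to a simple decision helper. The sketch below is a rough illustration only: `candidate_configuration` is a hypothetical function, and it considers nothing but the number of clusters and the round-trip time quoted in this article. A real sizing exercise would take many more factors into account.

```python
METRO_MAX_RTT_MS = 5.0    # limit quoted above for VPLEX Metro
GEO_MAX_RTT_MS = 50.0     # limit quoted above for VPLEX Geo


def candidate_configuration(cluster_count: int, rtt_ms: float = 0.0) -> str:
    """Pick the VPLEX configuration that fits the cluster count and RTT."""
    if cluster_count == 1:
        return "VPLEX Local"
    if cluster_count != 2:
        raise ValueError("the configurations described use one or two clusters")
    if rtt_ms < 0:
        raise ValueError("RTT cannot be negative")
    if rtt_ms <= METRO_MAX_RTT_MS:
        return "VPLEX Metro"
    if rtt_ms <= GEO_MAX_RTT_MS:
        return "VPLEX Geo"
    raise ValueError(f"{rtt_ms} ms exceeds the {GEO_MAX_RTT_MS} ms Geo limit")


if __name__ == "__main__":
    print(candidate_configuration(1))
    print(candidate_configuration(2, rtt_ms=2.0))
    print(candidate_configuration(2, rtt_ms=30.0))
```

Note that two clusters with a very low RTT map to Metro, which matches the point that Metro can also be deployed within a single data center.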

VPLEX Metro and VPLEX Geo systems optionally include a Witness. The Witness is implemented as a virtual machine and is deployed in a separate fault domain from the two VPLEX clusters. It is used to improve application availability in the presence of site failures and inter-cluster communication loss.

The VPLEX operating system is GeoSynchrony (currently version 5.3), designed for highly available, robust operation in geographically distributed environments.

VPLEX global distributed cache and modes

VPLEX Local and Metro both use the write-through cache mode. When a write request is received from a host to a virtual volume, the data is written through to the back-end storage volume(s) that map to the volume. When the array(s) acknowledge this data, an acknowledgement is then sent back from VPLEX to the host indicating a successful write.

VPLEX Geo uses write-back caching to achieve data durability without requiring synchronous operation. In this cache mode VPLEX accepts host writes into cache and places a protection copy in the memory of another local director before acknowledging the data to the host. The data is then sent asynchronously to the back-end storage arrays. Cache vaulting logic within VPLEX stores any unwritten cache data onto local SSD storage in the event of a power failure.
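The difference between the two cache modes comes down to when the host receives its acknowledgement. The toy model below records the order of events for each mode. It is a simplified sketch, not how GeoSynchrony is implemented: `BackendArray`, `Director`, and the three functions are hypothetical names, and real concerns such as vaulting, failures, and concurrency are left out.

```python
class BackendArray:
    """Stand-in for a back-end storage volume."""
    def __init__(self):
        self.blocks = {}

    def write(self, lba, data):
        self.blocks[lba] = data


class Director:
    """Stand-in for a director's cache memory."""
    def __init__(self, name):
        self.name = name
        self.dirty = {}        # write-back data not yet sent to the array
        self.protection = {}   # protection copies held on behalf of a peer


def write_through(backend, lba, data, log):
    """Local/Metro style: the array holds the data before the host is acked."""
    backend.write(lba, data)
    log.append("array-ack")
    log.append("host-ack")


def write_back(owner, peer, lba, data, log):
    """Geo style: cache plus a protection copy, then ack the host."""
    owner.dirty[lba] = data
    peer.protection[lba] = data      # copy in another local director's memory
    log.append("protection-copy")
    log.append("host-ack")


def destage(owner, peer, backend, log):
    """Later, asynchronously, push dirty data to the back-end array."""
    for lba, data in list(owner.dirty.items()):
        backend.write(lba, data)
        del owner.dirty[lba]
        peer.protection.pop(lba, None)
    log.append("array-ack")


if __name__ == "__main__":
    array, events = BackendArray(), []
    a, b = Director("A"), Director("B")
    write_back(a, b, lba=7, data=b"payload", log=events)
    print(events, 7 in array.blocks)   # host acked, array not yet written
    destage(a, b, array, events)
    print(events, 7 in array.blocks)
```

In the write-through path the host acknowledgement always follows the array acknowledgement, while in the write-back path the host is acknowledged as soon as the protection copy exists and the array is updated afterwards.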

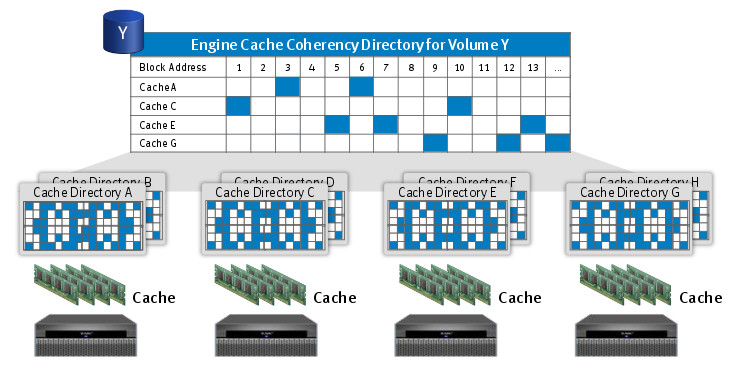

The individual memory systems of each VPLEX director are combined to form the VPLEX distributed cache. Data structures within these memories, in combination with distributed algorithms, achieve the coherency and consistency guarantees provided by VPLEX virtual storage. This guarantee ensures that the I/O behavior observed by hosts accessing VPLEX storage is consistent with the behavior of a traditional disk. Any director within a cluster is able to service an I/O request for a virtual volume served by that cluster. Each director within a cluster is exposed to the same set of physical storage volumes from the back-end arrays and has the same virtual-to-physical storage mapping metadata for its volumes. The distributed design extends across VPLEX Metro and Geo systems to provide cache coherency and consistency for the global system. This ensures that host accesses to a distributed volume always receive the most recent consistent data for that volume.

Distributed virtual volume between data centers

VPLEX provides distributed virtual volumes, one of its most useful features for a VMware Metro Storage Cluster. A distributed virtual volume is based on distributed mirroring, which protects the volume's data by mirroring it between the two VPLEX clusters:

- VPLEX Metro - based on Distributed RAID 1 volumes with write-through caching

- VPLEX Geo - based on Distributed RAID 1 volumes with write-back caching

Sharing data between sites (data centers)

VPLEX makes the same data accessible to all users at all times, even if they are at different sites. Changes made at one site show up right away at the other site.

Examples of EMC VPLEX use cases

- VMware Metro Storage Cluster - with VPLEX Metro, you can create a stretched VMware HA cluster, and with vMotion/DRS you can balance the cluster between data centers. In case of failure, VMware HA restarts VMs in the second data center.

- Collaboration - applications in one data center need to access data in the other data center.

- Data Migration - moving applications and data across different storage installations: within the same data center, across a campus, or within a geographical region.

For more information, see the EMC VPLEX 5.0 Architecture Guide.