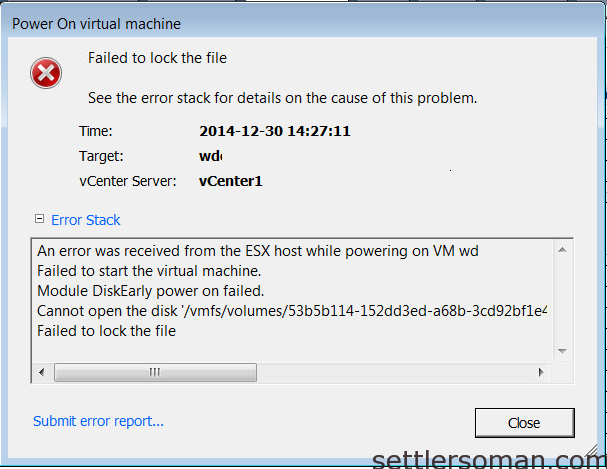

Recently, a customer of mine faced a problem with a VM that was powered off during a NetBackup snapshot operation and afterwards could not be powered on because of the error: Failed to lock the file.

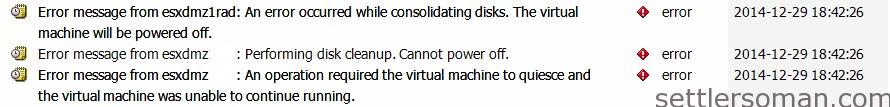

The errors in the screenshot above confirm that the VM was powered off while the NetBackup snapshot was being created. There were two of them, and they are worth a closer look because they are not rare:

An operation required the virtual machine to quiesce and the virtual machine was unable to continue running.

The issue can occur because of:

- high I/O in the virtual machine: the quiescing operation cannot flush all the data to disk while further I/O keeps being generated,

- out-of-date VMware Tools,

- sometimes, a backup agent installed inside the VM.

An error occurred while consolidating disks. The virtual machine will be powered off.

According to the VMware KB:

This issue occurs when one of the files required by the virtual machine has been opened by another application. During a Create or Delete Snapshot operation while a virtual machine is running, all the disk files are momentarily closed and reopened. During this window, the files could be opened by another virtual machine, management process, or third-party utility. If that application creates and maintains a lock on the required disk files, the virtual machine cannot reopen the file and resume running.

So what can you do when, as a result of the errors above, the VM cannot be powered on because of file locks? The solution depends on the type of datastore the VM is located on.

If the VM is located on an NFS datastore, follow these steps:

- Log in to the ESX host using an SSH client.

- Navigate to the directory where the virtual machine resides.

- Determine the name of the NFS lock (.lck) file with the command: `ls -la *lck`

- Remove the file with the command: `rm lck_file`
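The NFS steps can be wrapped in a small script for the ESXi shell. This is a minimal sketch, not a VMware-provided tool: the function names `list_nfs_locks` and `remove_nfs_locks` are hypothetical, and it assumes the lock files are hidden files whose names start with `.lck` (the usual naming on NFS datastores). A plain `*` glob does not match hidden files, which is why the pattern includes the leading dot. By default it only prints what it would delete; pass `--delete` to actually remove the files, and only do that once you are sure the VM is not running on any host.

```shell
#!/bin/sh
# Minimal sketch (hypothetical helpers, not a VMware tool).
# Assumes: run in the ESXi shell, the VM is powered off and not running on any
# other host, and NFS lock files are hidden files named ".lck<...>".

# Print the path of every lock file in the given VM directory.
list_nfs_locks() {
    vm_dir=$1
    # A plain * glob skips hidden files, so match the leading dot explicitly.
    for f in "$vm_dir"/.lck*; do
        # If nothing matches, the shell leaves the pattern unexpanded; skip it.
        if [ -e "$f" ]; then
            echo "$f"
        fi
    done
}

# Dry run by default; files are only deleted when the 2nd argument is --delete.
remove_nfs_locks() {
    vm_dir=$1
    mode=${2:-dry-run}
    list_nfs_locks "$vm_dir" | while IFS= read -r f; do
        if [ "$mode" = "--delete" ]; then
            rm -f -- "$f" && echo "removed: $f"
        else
            echo "would remove: $f"
        fi
    done
}
```

For example, run `remove_nfs_locks /vmfs/volumes/<datastore>/<vm>` first to review the list, then repeat the call with `--delete`.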

VMFS volumes do not have .lck files; locking on VMFS is handled within the VMFS metadata on the volume. If the VM is located on a VMFS datastore, follow these steps instead:

- Migrate the virtual machine to the host it was last known to be running on and attempt to power it on. This sometimes helps, especially in DRS-enabled environments, where the VM may have been registered on a different ESXi host.

- If that is unsuccessful, keep trying to power on the virtual machine on the other hosts in the cluster. Meanwhile, migrate all VMs off the original ESXi host (the one the VM was last known to be running on) and reboot that host. If you have only one ESX/ESXi host, or cannot vMotion or migrate virtual machines, you must schedule downtime for the affected virtual machines before rebooting.

- If the VM still does not power on, migrate it back to the host it was last known to be running on and try to power it on again.
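The power-on attempts in the steps above can also be made from the ESXi shell of each host with `vim-cmd`. This is a minimal sketch under stated assumptions, not part of the original procedure: `vmid_by_name` and `try_power_on` are hypothetical helpers, the VM must already be registered on the host where you run them, and the parsing assumes the usual `vim-cmd vmsvc/getallvms` layout with the Vmid in the first column and a VM name without spaces in the second. The `VIM_CMD` variable exists only so the command can be stubbed out for testing.

```shell
#!/bin/sh
# Minimal sketch (hypothetical helpers). Assumes it runs in the ESXi shell and
# that the VM name contains no spaces, so awk's default field split works.

# Overridable so the vim-cmd calls can be stubbed in tests.
VIM_CMD=${VIM_CMD:-vim-cmd}

# Read "vim-cmd vmsvc/getallvms" output on stdin and print the Vmid of the VM
# whose Name column equals $1. The first line is the column header.
vmid_by_name() {
    awk -v name="$1" 'NR > 1 && $2 == name { print $1; exit }'
}

# Look up the VM on this host and ask hostd (via vim-cmd) to power it on.
try_power_on() {
    vm_name=$1
    vmid=$($VIM_CMD vmsvc/getallvms | vmid_by_name "$vm_name")
    if [ -z "$vmid" ]; then
        echo "VM '$vm_name' is not registered on this host" >&2
        return 1
    fi
    # A file lock that is still held is expected to make this call fail again.
    $VIM_CMD vmsvc/power.on "$vmid"
}
```

For example, run `try_power_on <vm_name>` on each host in turn. If the VM is registered on a different host, move it there first through vCenter, or register its .vmx file on the current host with `vim-cmd solo/registervm`.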

Generally, the steps above are sufficient to solve the problem. If it persists, follow the further troubleshooting steps described in the VMware KB.