Some weeks ago I decided to use VMware vCenter Distributed Power Management (DPM) in the lab. In this post I will cover the following topics:

- vCenter Distributed Power Management (DPM) overview and requirements.

- How to configure DPM?

- Some advanced settings that I used in the lab 🙂

Distributed Power Management (DPM) overview

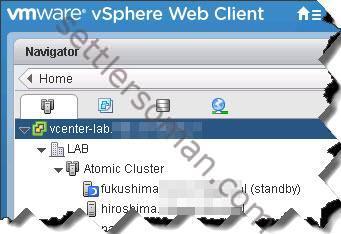

DPM is a DRS "extension" that helps to save power (so we can be "green"). It recommends powering ESX hosts off or on as CPU or memory utilization decreases or increases. VMware DPM also takes VMware HA settings and user-specified constraints into account. For example, if HA is configured to tolerate one host failure, DPM will leave at least two ESXi hosts powered on. How does DPM power an ESXi host back on? It uses wake-on-LAN (WoL) packets or one of these out-of-band methods: Intelligent Platform Management Interface (IPMI) or HP Integrated Lights-Out (iLO) technology. I will show how to configure DPM using HP iLO later in this post. When DPM powers off an ESXi host, that host is marked as Standby (standby mode).
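To make the WoL option a bit more concrete, here is a minimal Python sketch of the generic wake-on-LAN "magic packet" format (6 bytes of 0xFF followed by the target MAC address repeated 16 times). This is not DPM's own code, just an illustration of the packet layout; the function names, the example MAC address and the UDP port 9 default are my assumptions.

```python
import socket


def build_magic_packet(mac: str) -> bytes:
    """Return a wake-on-LAN magic packet for the given MAC address."""
    # Accept the common ':' and '-' separators.
    hex_digits = mac.replace(":", "").replace("-", "")
    if len(hex_digits) != 12:
        raise ValueError(f"expected a 6-byte MAC address, got {mac!r}")
    mac_bytes = bytes.fromhex(hex_digits)
    # Layout: 6 bytes of 0xFF, then the MAC address repeated 16 times.
    return b"\xff" * 6 + mac_bytes * 16


def send_magic_packet(mac: str, broadcast: str = "255.255.255.255", port: int = 9) -> None:
    """Broadcast the magic packet over UDP (port 9 is a common convention)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(build_magic_packet(mac), (broadcast, port))


# Hypothetical MAC address, for illustration only.
packet = build_magic_packet("00:11:22:33:44:55")
print(len(packet))  # 102
```

Calling `send_magic_packet` with the MAC address of a standby host's NIC is a handy way to check from a workstation that WoL works at all before relying on it in DPM.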

DPM configuration steps

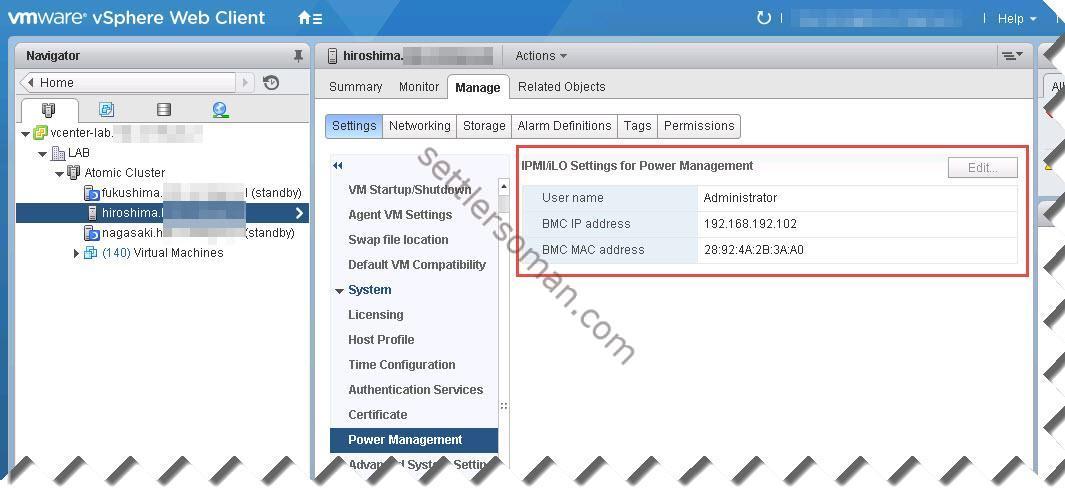

Before enabling Power Management for the hosts in a vSphere cluster, you need the username, password, IP address and MAC address of each host's iLO interface. Then follow these steps:

- Browse to each host in the vSphere Web Client.

- Click the Manage tab and click Settings.

- Under System, click Power Management.

- Click Edit.

- Enter the username, password, IP and MAC of iLO (in my case).

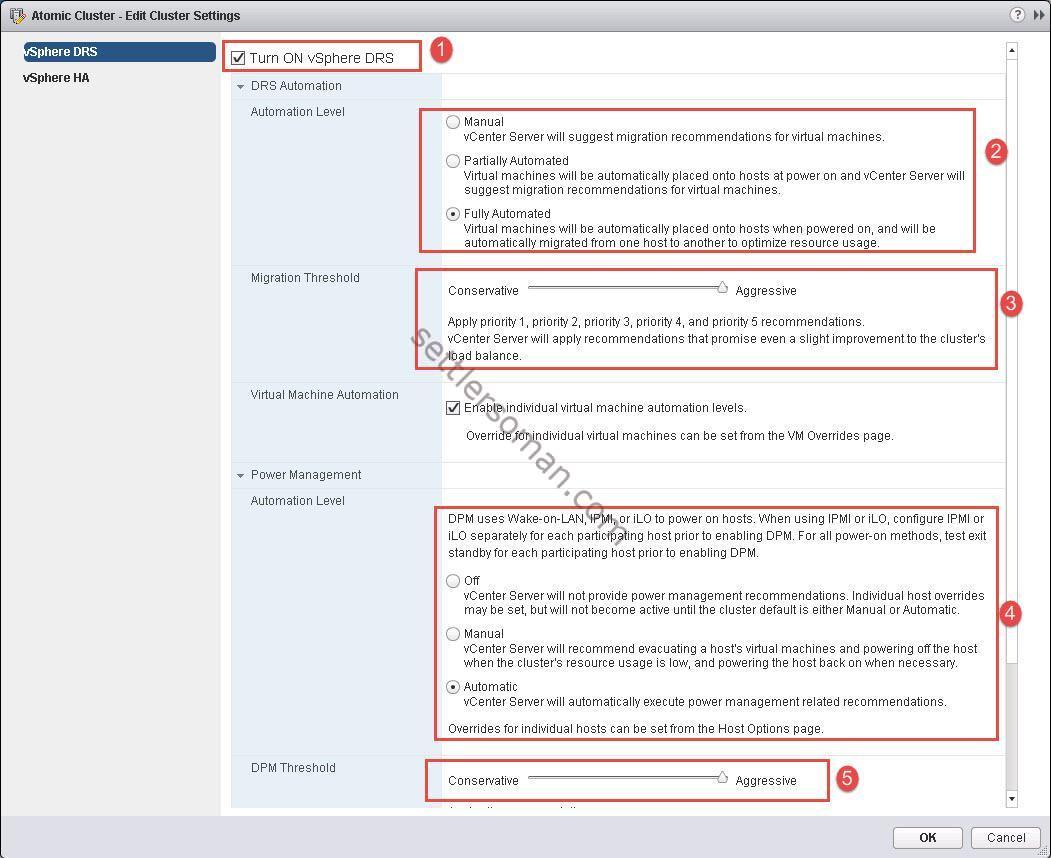

Now we can enable DPM. Follow these steps:

- Click on vSphere Cluster in the vSphere Web Client.

- Click the Manage tab and click Settings.

- Under Services, click vSphere DRS.

- Click Edit.

- Check Turn ON vSphere DRS (1) and set up DRS Automation Level (2) and Migration Threshold (3).

- Set up Power Management Automation Level (4) and DPM Threshold (5).

Note: The above figure presents settings configured in my lab. You should consider lower levels in production.

DRS advanced settings for DPM

The table below lists some of the advanced settings:

| Option Name | Description | Default Value | Value Range |
|---|---|---|---|
| DemandCapacityRatioTarget | Utilization target for each ESX host. | 63% | 43-90 |
| DemandCapacityRatioToleranceHost | Tolerance around the utilization target for each ESX host. Used to calculate the utilization range. | 18% | 10-40 |
| VmDemandHistorySecsHostOn | Period of demand history evaluated for ESX host power-on recommendations. | 300s | 0-3600 |
| VmDemandHistorySecsHostOff | Period of demand history evaluated for ESX host power-off recommendations. | 2400s | 0-3600 |
| EnablePowerPerformance | Set to 1 to enable host power-off cost-benefit analysis. Set to 0 to disable it. | 1 | 0-1 |
| PowerPerformanceHistorySecs | Period of demand history evaluated for power-off cost-benefit recommendations. | 3600s | 0-3600 |
| HostsMinUptimeSecs | Minimum uptime of all hosts before VMware DPM will consider any host as a power-off candidate. | 600s | 0-max |
| MinPoweredOnCpuCapacity | Minimum amount of powered-on CPU capacity maintained by VMware DPM. | 1 MHz | 0-max |
| MinPoweredOnMemCapacity | Minimum amount of powered-on memory capacity maintained by VMware DPM. | 1 MB | 0-max |

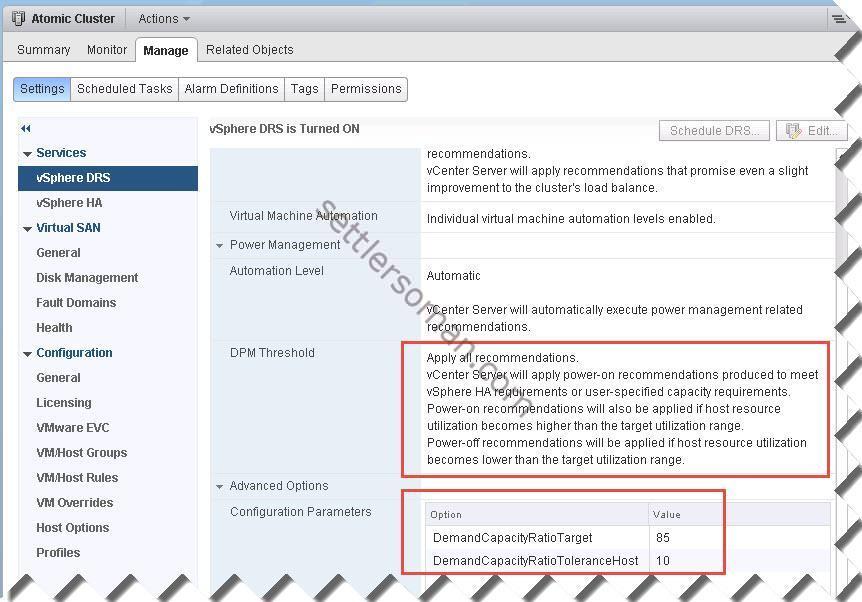

The two most important settings (in my case) are:

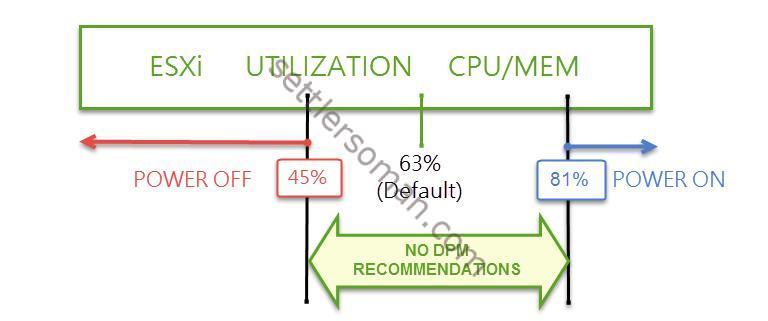

- DemandCapacityRatioTarget = the utilization target of an ESX/ESXi host. By default, this is set to 63%.

- DemandCapacityRatioToleranceHost = the range around the utilization target for a host. By default, this is set to 18%.

The utilization range of an ESXi host is calculated as follows:

Utilization range = DemandCapacityRatioTarget +/- DemandCapacityRatioToleranceHost

With the default values, DPM therefore attempts to keep ESXi resource utilization between 45% (63% - 18%) and 81% (63% + 18%). If CPU or memory utilization drops below 45% on all hosts, DPM attempts (using "what-if" mode) to power off a host.

DPM can power off additional hosts until at least one host's CPU or memory utilization is above 45%. If CPU or memory utilization moves above 81% on all hosts, DPM attempts to power on a host to better distribute the load. DPM evaluates resource demand over a five-minute period (300 s) for power-on operations and over a 40-minute period (2400 s) for power-off operations.
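The formula above is easy to play with in a few lines of Python. This is only a sketch of the arithmetic, not of DPM's real decision logic; the function names are mine, and the defaults are the values from the table.

```python
from typing import Tuple


def utilization_range(target: float = 63.0, tolerance: float = 18.0) -> Tuple[float, float]:
    """Return (low, high) in percent: target minus/plus tolerance."""
    return target - tolerance, target + tolerance


def classify(utilization: float, target: float = 63.0, tolerance: float = 18.0) -> str:
    """Say where a host's utilization (in percent) sits relative to the range."""
    low, high = utilization_range(target, tolerance)
    if utilization < low:
        return "below range"
    if utilization > high:
        return "above range"
    return "within range"


print(utilization_range())  # (45.0, 81.0)
print(classify(30.0))       # below range
print(classify(90.0))       # above range
```

A host at 30% would be a hint that the cluster may be able to run on fewer hosts, while a host at 90% points the other way.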

DPM/DRS settings in my lab

We bought a new server so I decided to tune DPM a little bit:

Changing default value of Demand Capacity Ratio

- DemandCapacityRatioTarget --> changed to 85

- DemandCapacityRatioToleranceHost --> changed to 10

Now my ESXi host can be utilized up to 95% (85% + 10% tolerance) before DPM powers on the next ESXi host.
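Before changing these values it is worth checking them against the value ranges from the advanced settings table (43-90 for the target, 10-40 for the tolerance). A small sketch of that check, assuming a helper name and dictionary layout of my own:

```python
# Allowed value ranges, copied from the advanced settings table in this post.
ALLOWED = {
    "DemandCapacityRatioTarget": (43, 90),
    "DemandCapacityRatioToleranceHost": (10, 40),
}


def check_settings(settings: dict) -> tuple:
    """Validate both settings and return the resulting (low, high) range in percent."""
    for name, (low, high) in ALLOWED.items():
        value = settings[name]
        if not low <= value <= high:
            raise ValueError(f"{name}={value} is outside the allowed range {low}-{high}")
    target = settings["DemandCapacityRatioTarget"]
    tolerance = settings["DemandCapacityRatioToleranceHost"]
    return target - tolerance, target + tolerance


lab = {"DemandCapacityRatioTarget": 85, "DemandCapacityRatioToleranceHost": 10}
print(check_settings(lab))  # (75, 95)
```

Note that a tolerance of 10 is the lowest value the table allows, so with a target of 85 the range cannot get any narrower than 75% to 95%.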

DPM/DRS configuration - level 5 (aggressive)

I configured both DPM and DRS to apply all recommendations automatically (Level 5). Please remember that if the VMware DRS migration recommendation threshold is set to priority 1, VMware DPM does not recommend any host power-off operations. At that level, VMware DRS generates vMotion recommendations only to address constraint violations, not to rebalance virtual machines across hosts in the cluster.

Disabling VMware HA

I decided to disable VMware HA in my lab because I want to save as much power as possible, ideally with only one ESXi host powered on (in the worst case, 95% utilized). DPM always ensures that at least one host keeps running in a DPM-enabled cluster, but when you enable VMware HA, DPM keeps at least two ESXi hosts powered on (I tested it). If you enable VMware HA Admission Control, DPM takes that into account and may leave two or more ESXi hosts powered on (depending on the vSphere cluster failover settings).

Conclusion

Distributed Power Management (DPM) is a useful solution not only for production ESXi hosts but also for test and lab environments 🙂